Data Perspectives & Representations

Failure of Software Design

Software systems process data: either the input/output of a computation, or persistent data read from storage for processing. To minimize the effort and risk of realizing a software solution, many architects model the data based on the inputs and outputs. They just add some annotations to those models, and voila, the models can be written to and read from a database!

Software design is the process of minimizing the effort and risk of developing your software.

Unfortunately, they do not realize that using the same data perspective for input/output and storage (in other words, not using different data representations) is one of the biggest reasons a system turns into a legacy system that needs to be replaced as soon as it is finished. :(

Software architecture is the process of making sure that future changes in needs can easily be accommodated in your software.

To explain a bit more: business needs are constantly changing, so the inputs and outputs of your system will change too. If you modeled them one-to-one onto your database, your stored data is no longer compatible. The mistake here is that architecture was not taken into consideration at all. The only thing that was considered was how to easily supply the outputs for one specific interface; as a result, the business logic cannot be applied in another domain, and the data stored in the database cannot easily be used by other systems.

PS1: don't fall into the trap when your architect argues that this system is just a stand-alone system... which business process is isolated from every other business process?

Data Perspectives

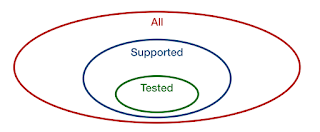

Data needs to be viewed by a system from different angles. Although each perspective (angle) has similarities with the others, each one is unique. A perspective is captured in a data representation. The following sections go into the different perspectives that need to be considered.

Perspective 1: Computation (Business Logic)

To implement the functionality of the system, business logic must be developed. The #1 question is: how can we implement the business logic in as few lines as possible? The fewer lines needed, the more easily and cheaply you can alter your software (aka: agile software). This is done by introducing an expression language for implementing the business logic.

The related design pattern is called Domain Model. A key aspect of this pattern is that it combines logic with data. This means that plumbing that is not a key feature of the business logic, like loading related records/data, is hidden inside the domain model. As a result, your domain model can't be stored one-to-one in the database; you need a mapping to the data representation. Because of this decoupling, the business logic (computation) becomes reusable and can be applied in other/future use cases.
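To make the Domain Model idea concrete, here is a minimal Python sketch, assuming a hypothetical order/customer domain. `CustomerEntity` and `OrderEntity` stand in for the data representation; the `Order` domain object hides the loading of the related customer (injected as a hypothetical `load_customer` callable) so that the business rule in `total()` stays short. All names are illustrative, not taken from any library.

```python
from __future__ import annotations

from collections.abc import Callable
from dataclasses import dataclass


# Data representation (hypothetical): plain data, relationships by identifier only.
@dataclass(frozen=True)
class CustomerEntity:
    id: str
    name: str
    discount_pct: float


@dataclass(frozen=True)
class OrderEntity:
    id: str
    customer_id: str                  # reference to another entity, not an embedded copy
    line_amounts: tuple[float, ...]


class Order:
    """Domain object: combines order data with the business logic that uses it."""

    def __init__(self, entity: OrderEntity,
                 load_customer: Callable[[str], CustomerEntity]):
        self._entity = entity
        self._load_customer = load_customer   # plumbing injected by a mapper
        self._customer: CustomerEntity | None = None

    @property
    def customer(self) -> CustomerEntity:
        # Lazily load the related record once; callers never see this plumbing.
        if self._customer is None:
            self._customer = self._load_customer(self._entity.customer_id)
        return self._customer

    def total(self) -> float:
        # The business rule reads like the requirement: lines minus customer discount.
        subtotal = sum(self._entity.line_amounts)
        return round(subtotal * (1 - self.customer.discount_pct / 100), 2)
```

For example, `Order(OrderEntity("o1", "c1", (100.0, 50.0)), {"c1": CustomerEntity("c1", "Acme", 10.0)}.__getitem__).total()` returns `135.0`. Because the domain object holds a loader rather than an embedded customer, it cannot be persisted one-to-one; a mapper has to turn it back into entities.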

Perspective 2: Data

Consider a legacy system and try to answer the question: "if client X wants its data to be removed after 60 days, what exactly should be removed?" The data in the database was initially organized according to the interfaces (inputs/outputs) of the system. Or, when domain models are introduced for computation, data gets co-mingled. And when you look directly from the storage perspective, it can be hard to understand what to consider, because of copies in different places, data (de-)normalization, indexes containing sensitive information, and more.

Another issue arises when no data perspective is used, which means the interface or computation representation is mapped directly onto the storage representation. If you then switch from a JSON model to a relational or graph model, you need to rewrite the transformations for every interface and every piece of business logic.

PS2: If you assume that the logical data model of your data storage system (e.g. the JSON document or relational data model) is your data representation, that implies you are writing such a database system! Needless to say, you are not writing such a system, so don't be tempted to use it as the data representation for the data perspective.

The data perspective decouples the data from the specific technology used to store it, and it is where the data is governed (data governance). In this model, you define Entities: units of data that can be addressed by an identifier. An entity does not contain other entities (it is not a composite); instead, it captures relationships to other entities. Data governance is done at the granularity of entities.
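A minimal sketch of entities in the data perspective, assuming a hypothetical `Entity` type with an `owner_id` field used for governance: attributes hold only scalar values, other entities are referenced by identifier, and a retention rule like the "remove client X's data after 60 days" question is answered by selecting whole entities. The field and function names are illustrative assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timedelta

# Values an entity attribute may hold; nested entities are not allowed.
SCALAR_TYPES = (str, int, float, bool, type(None))


@dataclass
class Entity:
    id: str                      # identifier used to address the entity
    kind: str                    # e.g. "customer", "order"
    owner_id: str                # client the data belongs to (hypothetical governance field)
    created_at: datetime
    attributes: dict = field(default_factory=dict)  # scalar values only
    references: dict = field(default_factory=dict)  # relation name -> entity id

    def __post_init__(self) -> None:
        # Enforce "not a composite": related data must go through references.
        for key, value in self.attributes.items():
            if not isinstance(value, SCALAR_TYPES):
                raise TypeError(f"attribute {key!r} is not scalar; use a reference instead")


def purge_client_data(entities: dict[str, Entity], owner_id: str,
                      max_age: timedelta, now: datetime) -> list[str]:
    """Delete every entity of one client older than max_age; return the deleted ids."""
    expired = [e.id for e in entities.values()
               if e.owner_id == owner_id and now - e.created_at > max_age]
    for entity_id in expired:
        del entities[entity_id]
    return expired


def dangling_references(entities: dict[str, Entity]) -> list[tuple[str, str]]:
    """List (entity id, relation) pairs whose target entity no longer exists."""
    return [(e.id, rel) for e in entities.values()
            for rel, target in e.references.items() if target not in entities]
```

For example, purging client `X` with a 60-day limit removes an old customer entity and order entity, while `dangling_references` can then reveal a newer invoice that still points to the deleted order. Deciding what to do with such references is exactly the kind of governance question that entity-level granularity makes explicit.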

Perspective 3: Storage

This perspective looks at the capabilities of the storage system and describes how to store your data. There is a mapping between each database of a storage system and the data representation. In other words, each database has a physical data model that needs to be mapped to the logical data model. Therefore most systems have multiple physical data models, often one per type/domain of the system's data, although this is not required. Data segregation (logical and physical) is realized by the physical data model.
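A minimal Python sketch of the storage perspective, assuming a hypothetical logical entity `Customer`: the same entity is mapped onto two physical data models, a relational table in SQLite (via the standard `sqlite3` module) and JSON documents held in a dict as a stand-in for a document database. The store class names are illustrative. The relational variant uses plain typed columns rather than a language-specific binary blob, so ETL or administration scripts can query it directly.

```python
from __future__ import annotations

import json
import sqlite3
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class Customer:
    """Logical data model (hypothetical entity)."""
    id: str
    name: str
    country: str


class SqliteCustomerStore:
    """Physical model 1: one relational table with plain, typed columns."""

    def __init__(self, conn: sqlite3.Connection):
        self._conn = conn
        conn.execute("CREATE TABLE IF NOT EXISTS customer ("
                     "id TEXT PRIMARY KEY, name TEXT NOT NULL, country TEXT NOT NULL)")
        # A physical optimization that the logical model never sees.
        conn.execute("CREATE INDEX IF NOT EXISTS customer_country ON customer(country)")

    def save(self, c: Customer) -> None:
        self._conn.execute("INSERT OR REPLACE INTO customer (id, name, country) "
                           "VALUES (?, ?, ?)", (c.id, c.name, c.country))

    def load(self, customer_id: str) -> Customer | None:
        row = self._conn.execute("SELECT id, name, country FROM customer WHERE id = ?",
                                 (customer_id,)).fetchone()
        return Customer(*row) if row else None


class JsonDocumentCustomerStore:
    """Physical model 2: JSON documents keyed by id (stand-in for a document DB)."""

    def __init__(self) -> None:
        self._docs: dict[str, str] = {}

    def save(self, c: Customer) -> None:
        self._docs[c.id] = json.dumps(asdict(c))

    def load(self, customer_id: str) -> Customer | None:
        doc = self._docs.get(customer_id)
        return Customer(**json.loads(doc)) if doc else None
```

Swapping one store for the other changes nothing for the code that works with `Customer`; only the mapper differs. And because the SQLite table uses plain columns, an administration script can run something like `SELECT count(*) FROM customer WHERE country = 'NL'` without going through the application.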

Best practice is to model the data in such a way that simple ETL and data administration scripts can run directly on this layer. That means that if you write data in a serialized binary format specific to the programming language of some business logic, you are doing it wrong. Understand that integration is a key concern that needs to be considered at all levels.

Also consider the performance aspects (memory, computation and disk) of specific physical representations. Try to find a good trade-off; that trade-off determines how you model. So don't design the mapping between the physical and logical data model top-down, but bottom-up.

Perspective 4: Interfaces

Whereas many start with modeling the interfaces and create only a single data representation that is used across all perspectives, the interface representation is actually the last one to create. Based on the interface specifications, you combine the available outputs of the business logic components to serve the data needed to realize an API or (G)UI.

It is a good habit to standardize output formats, fields, authentication, and other aspects of APIs across your whole stack, not just within a single system. This cross-system/cross-functional API design can now easily be accommodated, even for legacy systems, by implementing a specific API component (adapter) on top of the system that takes domain objects and translates them into Data Transfer Objects (DTOs).
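A minimal sketch of such an API component in Python, assuming a simplified, hypothetical `Order` domain object and made-up stack-wide conventions (amounts in cents, a `currency` field, and an `apiVersion` envelope). `OrderApiAdapter` translates domain objects into `OrderDto` data transfer objects and applies those conventions; none of these names come from a real framework.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass


class Order:
    """Domain object (simplified, hypothetical)."""

    def __init__(self, order_id: str, line_amounts_cents: list[int], discount_pct: int):
        self.order_id = order_id
        self._lines = line_amounts_cents
        self._discount_pct = discount_pct

    def total_cents(self) -> int:
        subtotal = sum(self._lines)
        return subtotal - subtotal * self._discount_pct // 100


@dataclass(frozen=True)
class OrderDto:
    """Data Transfer Object: only what the interface needs.

    Field names deliberately mirror the (assumed) camelCase wire format.
    """
    orderId: str
    totalCents: int
    currency: str


class OrderApiAdapter:
    """API component: maps domain objects to DTOs and applies shared conventions."""

    API_VERSION = "1"  # hypothetical stack-wide convention

    def __init__(self, currency: str = "EUR"):
        self._currency = currency

    def to_dto(self, order: Order) -> OrderDto:
        return OrderDto(orderId=order.order_id,
                        totalCents=order.total_cents(),
                        currency=self._currency)

    def render(self, orders: list[Order]) -> str:
        # Same envelope for every endpoint of every system (assumed convention).
        body = {"apiVersion": self.API_VERSION,
                "data": [asdict(self.to_dto(o)) for o in orders]}
        return json.dumps(body, sort_keys=True)
```

For example, `OrderApiAdapter().render([Order("o1", [10000, 5000], 10)])` yields `{"apiVersion": "1", "data": [{"currency": "EUR", "orderId": "o1", "totalCents": 13500}]}`. Keeping the envelope and field conventions in the adapter means they can be aligned across the whole stack without touching the domain objects.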

Conclusion

For any system, consider each perspective related to data (computation, data, storage and interfaces) independently. This will yield a normalized result across all your systems, enabling standardization and integration. Once you have the specific data representations, write mappers between:

- storage (physical data model) and data (logical data model) in a store component

- data (logical data model / entities) and computation (domain objects) in a service component (if service-specific) or a model component (if not service-specific)

- computation (domain objects) and interfaces (data transfer objects) in an api component
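The three mappers listed above can be sketched end-to-end in Python, assuming a hypothetical product domain with a physical `(id, name, net_price_cents)` row and a made-up 21% VAT rule. `row_to_entity` plays the role of the store component, the `Product` domain object wraps the entity (model component), and `to_dto` is the api component. It illustrates the layering under these assumptions; it is not a prescribed implementation.

```python
from __future__ import annotations

from dataclasses import dataclass


# --- storage -> data (store component) ---
@dataclass(frozen=True)
class ProductEntity:
    """Logical data model."""
    id: str
    name: str
    net_price_cents: int


def row_to_entity(row: tuple[str, str, int]) -> ProductEntity:
    # Physical model assumed to be an (id, name, net_price_cents) row.
    product_id, name, net_price_cents = row
    return ProductEntity(product_id, name, net_price_cents)


# --- data -> computation (model component) ---
class Product:
    """Domain object wrapping the entity with business logic."""

    VAT_PCT = 21  # hypothetical business rule

    def __init__(self, entity: ProductEntity):
        self._entity = entity

    @property
    def id(self) -> str:
        return self._entity.id

    def gross_price_cents(self) -> int:
        return self._entity.net_price_cents * (100 + self.VAT_PCT) // 100


# --- computation -> interfaces (api component) ---
@dataclass(frozen=True)
class ProductDto:
    """Data Transfer Object: exposes only what the interface needs."""
    id: str
    price_cents: int


def to_dto(product: Product) -> ProductDto:
    return ProductDto(product.id, product.gross_price_cents())
```

For example, `to_dto(Product(row_to_entity(("p1", "Widget", 1000))))` gives `ProductDto(id='p1', price_cents=1210)`. Each mapper only knows its two neighbouring representations, so a change in storage, business rules or interface stays local to one mapper.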

If you want to reduce the number of representations, you can start by skipping the computation representation, although this will result in more verbose business logic. If you want to reduce abstraction even further (fewer representations), consider mapping the data perspective directly onto your data storage system (no explicit storage perspective/mapper). This rules out many performance optimizations, but they can still be introduced at a later stage without extra cost.

The interfaces of a system are specific to a set of use cases, while the stored data is company/domain-wide and integrated. Therefore, never apply your interface representation directly to your storage system. Doing so will make your system legacy by design.
